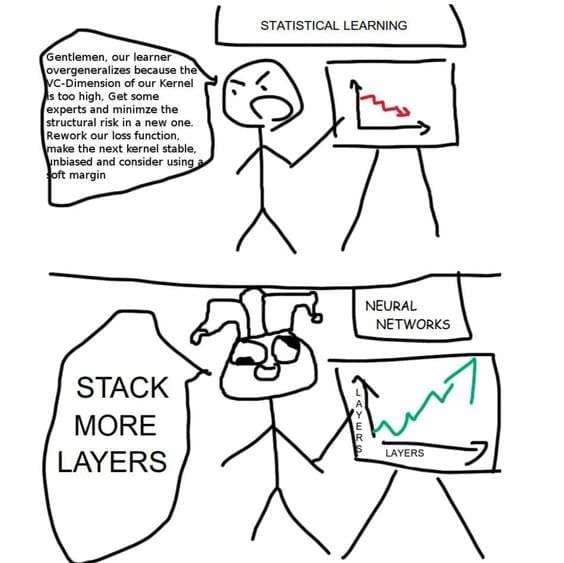

The 2 types of Data Science/Machine Learning guys you see at work...

In the world of machine learning, there are two distinct mindsets: classical statistical learning and modern neural networks. I’ve noticed that classical approaches feel like solving a complex puzzle: tuning loss functions, adjusting kern...